Sonic Imagination (2021)

SNSF-funded artistic media research project on the use of binaural audio techniques for stimulating intrinsic imagery in the context of speculative scenarios taking place on Freilager-Platz in Basel, Switzerland. In collaboration with Martin Rumori.

We aimed to investigate the idea that virtual sound sources in audio-augmented environments are particularly suitable for triggering and directing the imagination within human inner perception. Our assumption was that listening binaurally to imaginary entities at the place of recording (in situ, so to speak) enhances the imagination in a special way, since sound, as a non-visual medium, favours the creation of images in human inner perception.

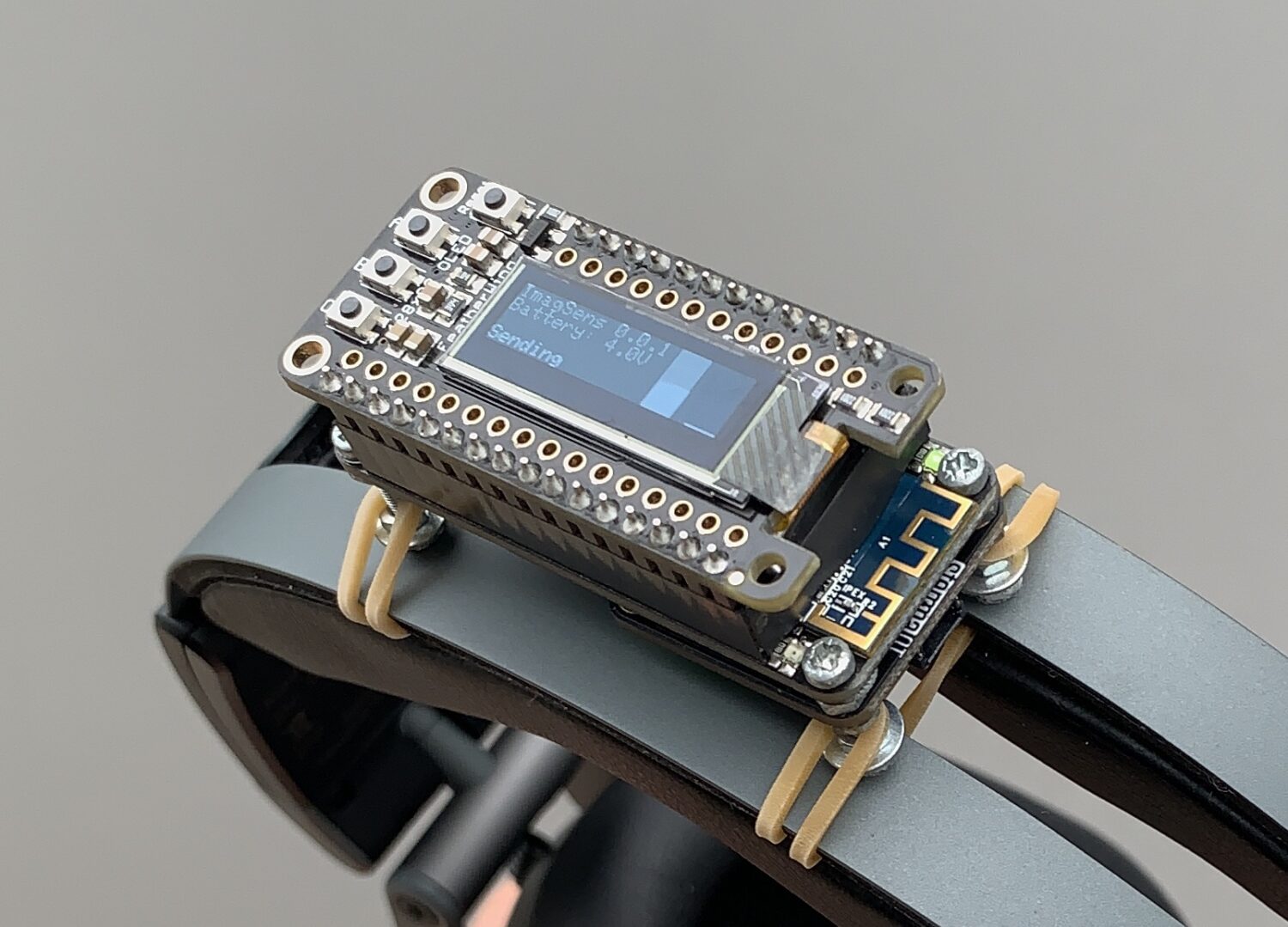

We experimented with building apps for mobile devices using ›Unity 3D‹ as the development framework and ›Google Resonance‹ as the binaural spatializer for object-based sound. We developed various iterations of large-scale 6-DOF tracking using GPS location services and ready-made head-trackers such as ›Apple AirPods‹. Since these headphones lack an absolute magnetic north reference, Martin Rumori developed our own head-tracking solution, which combines low-latency tracking with absolute magnetic sensing. The corresponding source code is available on GitHub. Position-based tracking via GPS turned out not to be precise enough for our needs, and while we therefore investigated vision- and LiDAR-based tracking, we eventually abandoned positional tracking altogether in order to focus on high-quality rotational head-tracking and the authentic reconstruction of on-site spatial reverberation.
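
Why an absolute magnetic reference matters can be illustrated with a common sensor-fusion technique, the complementary filter. The sketch below is not taken from Martin Rumori’s head-tracker code; it is a minimal, hypothetical example in which the gyroscope’s yaw rate is integrated for low latency while the estimate is slowly pulled toward a magnetometer (compass) heading to cancel gyro drift. The function names, the blend factor `alpha`, the 100 Hz update rate and the assumption of a tilt-compensated heading are all illustrative choices.

```python
def wrap_deg(angle: float) -> float:
    """Wrap an angle in degrees into the range [-180, 180)."""
    return (angle + 180.0) % 360.0 - 180.0


def fuse_yaw(yaw, gyro_rate, mag_heading, dt, alpha=0.98):
    """One step of a complementary filter for head yaw (all angles in degrees).

    yaw          previous fused estimate (0 = magnetic north)
    gyro_rate    yaw rate from the gyroscope in degrees/second
    mag_heading  tilt-compensated compass heading from the magnetometer (assumed)
    dt           time since the previous step in seconds
    alpha        weight of the gyro path; (1 - alpha) pulls toward the compass
    Assumes gyro and compass share the same sign convention.
    """
    # Fast path: integrate the gyro (low latency, but drifts over time).
    predicted = yaw + gyro_rate * dt
    # Slow path: nudge toward the absolute heading along the shorter arc,
    # so that e.g. 179 and -179 degrees are treated as 2 degrees apart.
    error = wrap_deg(mag_heading - predicted)
    return wrap_deg(predicted + (1.0 - alpha) * error)


if __name__ == "__main__":
    # Simulated still head facing east (90 deg) with a biased gyro (0.5 deg/s).
    yaw = 0.0
    for _ in range(2000):  # 20 s of updates at a hypothetical 100 Hz
        yaw = fuse_yaw(yaw, gyro_rate=0.5, mag_heading=90.0, dt=0.01)
    print(round(yaw, 2))
```

With the simulated gyro bias of 0.5 °/s, pure gyro integration would drift by 10° over these 20 s, whereas the fused estimate settles within about a quarter of a degree of the 90° compass heading. Raising `alpha` suppresses more magnetometer jitter, at the cost of a larger bias-induced offset and slower correction.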

We looked into simulating spatial reverberation using acoustic ray-tracing, but eventually chose a more classic approach: we measured impulse responses on-site at the campus of the HGK FHNW in Basel by bursting balloons and recording the bursts with a 2nd-order Ambisonics microphone. Next, we produced 3rd-order Ambisonics ›audio beds‹ for our scenarios in ›Reaper‹ and played them back with a custom-made, Unity-based iOS app using the ›Rapture3D‹ Ambisonics spatializer by ›Blue Ripple Sound‹. Unfortunately, due to Blue Ripple Sound’s licensing terms, this iOS app cannot be shared as open source. We initially planned to also experiment with loudspeaker arrays using crosstalk cancellation, but dropped this plan in favour of the mobile app development described above.
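
Two pieces of Ambisonics background may help here. Full-sphere Ambisonics of order N uses (N + 1)² channels, so a 2nd-order microphone records 9 channels and a 3rd-order audio bed has 16. Placing a dry source into a measured space amounts to convolving it with each channel of the Ambisonic room impulse response, and a common way to apply rotational head-tracking is to counter-rotate the sound field before binaural decoding. The plain-Python sketch below illustrates these ideas under stated assumptions: it uses a naive direct convolution (production convolution reverbs typically use FFT-based, often partitioned, convolution), the rotation is shown only for first order in ACN channel order (W, Y, Z, X), and all function names are hypothetical. It does not reflect the internals or API of ›Rapture3D‹ or ›Reaper‹.

```python
import math


def ambisonic_channels(order: int) -> int:
    """Channel count of full-sphere (periphonic) Ambisonics of the given order."""
    return (order + 1) ** 2


def convolve(x, h):
    """Naive direct-form linear convolution; O(len(x) * len(h)), illustration only."""
    y = [0.0] * (len(x) + len(h) - 1)
    for n, xn in enumerate(x):
        for k, hk in enumerate(h):
            y[n + k] += xn * hk
    return y


def place_in_room(dry, ambi_ir):
    """Convolve a mono dry recording with every channel of a multichannel
    Ambisonic room impulse response (a list of per-channel sample lists),
    giving one output channel per IR channel."""
    return [convolve(dry, channel) for channel in ambi_ir]


def rotate_foa_yaw(w, y, z, x, yaw_rad):
    """Counter-rotate one first-order sample (ACN order W, Y, Z, X) for a head
    yaw of yaw_rad, positive = head turned to the left (counterclockwise).
    A source at azimuth phi then appears at phi - yaw relative to the head.
    W and Z are unaffected by a rotation about the vertical axis."""
    c, s = math.cos(yaw_rad), math.sin(yaw_rad)
    return w, y * c - x * s, z, x * c + y * s
```

For example, a source 90° to the left (Y = 1, X = 0) should appear straight ahead once the listener turns the head 90° to the left: `rotate_foa_yaw(1.0, 1.0, 0.0, 0.0, math.pi / 2)` returns approximately `(1.0, 0.0, 0.0, 1.0)`.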

Masses of demonstrators take part in the March on Washington on August 28, 1963 (AP Photo via WTOP).

We developed and juxtaposed two separate scenarios for our aesthetic research that couldn’t be more different. Firstly, in the context of our interest in simulation techniques for participatory urbanism, we sonically re-enacted Martin Luther King’s historic ›I Have A Dream‹ speech on the campus. We restored the archival recordings of King’s speech and designed an audio bed using the technique described above, so as to create the counterfactual impression that the famous speech is taking place on our campus, in the here and now of the listeners.

Still images taken from the YouTube clip ›Israeli Iron Dome filmed intercepting rockets from Gaza‹, uploaded by ›The Telegraph‹ in May 2021.

Secondly, in the context of our interest in ›virtual reality‹ trauma therapy and speculative art and design, we reconstructed the activity of the Israeli ›Iron Dome‹ missile interception system during the 2021 conflict with Gaza, based on a YouTube video uploaded by ›The Telegraph‹. We want to emphasise that we did not conduct actual trauma therapy research with patients; rather, we aimed at fundamental research on media aesthetics in this context.

We presented both scenarios to a group of seven colleagues and students. All of them found the audio beds highly plausible and consistent with the site-specific situation, to the point that one participant thought the headphones were just a placebo and that Martin Luther King’s speech was being played back over large loudspeakers on the campus. The participants found both scenarios emotionally touching, which supports our research hypothesis that such media can indeed be used to stimulate emotions and imagination. Assessing the specific content and quality of the evoked mental imagery turned out to be more difficult, not least because such imagery is by nature volatile and inaccessible. In future work, a collaboration with psychologists or neuroscientists would be helpful in this regard, but for our initial interest in researching new forms of media technologies and applications, we consider our results satisfactory and insightful. Audio excerpts of the recorded interviews have been published as open research data on Zenodo. A preliminary video documentation of the two on-site scenarios is also available as an open research data download.